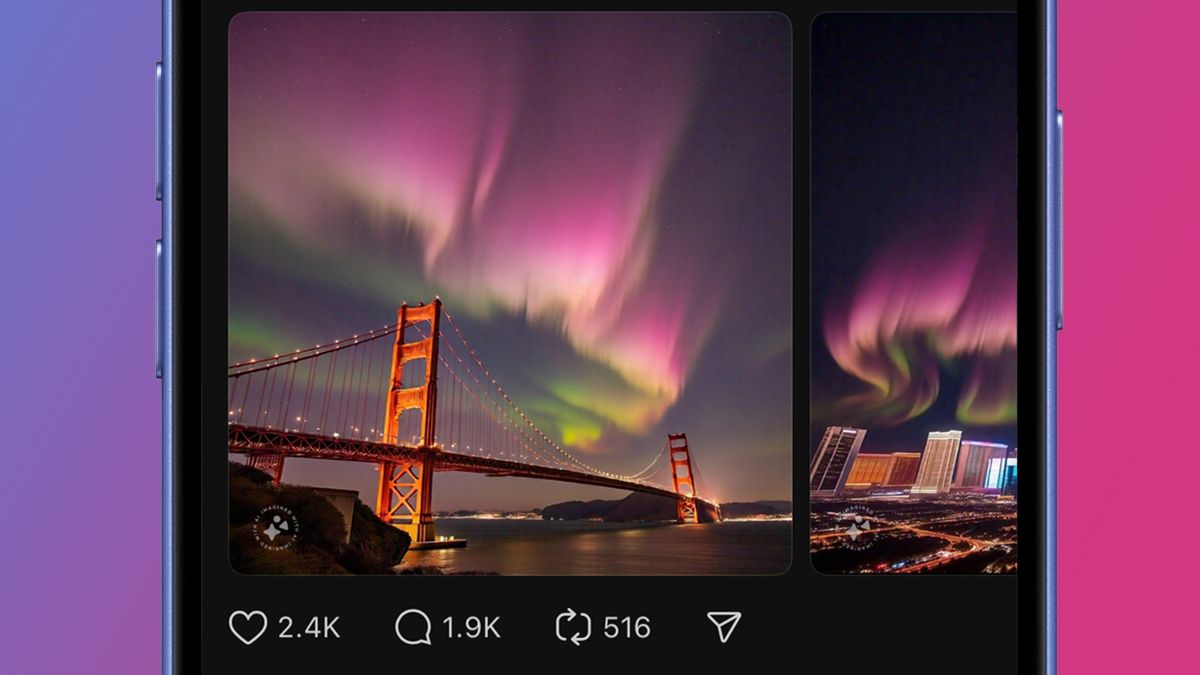

As AI image generators improve, many people have voiced concerns about the spread of misinformation and fake imagery. A recent Meta post on Threads drew particular criticism. Captioned “POV: you missed the Northern Lights IRL, so you made your own with Meta AI,” it suggested that anyone who missed the spectacular aurora display could simply generate their own with Meta’s tools. The post included AI images of the Northern Lights over landmarks such as the Golden Gate Bridge and Las Vegas.

The tone-deaf suggestion drew significant backlash, with many users objecting to a platform encouraging people to fake their experiences. One user replied, “Please sell Instagram to someone who cares about photography.” Kevin M. Gill, a software engineer at NASA, said that AI-fabricated images like the ones Meta shared “make our cultural intelligence worse.”

The Threads post may have been an isolated misstep rather than a reflection of Meta’s overall stance on its AI image generator, but the implications are still troubling. On the one hand, generating creative images can be harmless fun; on the other, presenting those images as real can mislead audiences into believing that fabricated scenes are authentic.

The core issue is that Meta’s post appears to encourage users to trick their followers into believing they witnessed a real event. For many, that crosses an ethical line, especially given how quickly misinformation can spread around breaking news far more consequential than an aurora display.

This raises a fair question: how does generating an image with AI compare with editing a real photo? Is swapping in a new sky with Photoshop’s Sky Replacement tool, or extending a scene with Adobe’s Generative Fill, meaningfully different from creating an AI image from scratch?

Debates about generative AI often come back to transparency. The images Meta shared were not a problem in themselves; the problem was the implication that users could pass them off as their own experiences. Making an image’s origins clear is a responsibility shared by tech companies and users alike.

Several initiatives aim to address this by setting standards for labeling AI-generated content. Google Photos, for example, is reportedly testing metadata that indicates whether an image was generated by AI. Meanwhile, Adobe’s Content Authenticity Initiative (CAI) is working to combat visual misinformation through a metadata standard that records where an image came from and how it was edited.

Google also recently announced plans to adopt CAI guidelines to label AI images in its search results. Until such standards are widely adopted, however, users are left to navigate a murky landscape while AI image generators continue to advance rapidly.

Until these standards mature, social media users should be upfront when sharing images that are entirely AI-generated. Tech giants, for their part, should avoid promoting misleading practices, because the potential for misuse grows as the technology improves.

Discover more from Marki Mugan
